A general meeting looking at possible test sites and attribute learning.

Test sites:

Wikipedia and Maryland General are good sources of dense documents (I have used a document from MG for testing so far).

Attributes: If we can learn cardinality for an attribute, it will take one of four forms: 0..1, 1..1, 0..N, 1..N.
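One way the four forms could be derived is from counts of how many values of the attribute were seen for each observed instance of a concept. The sketch below is only an illustration under that assumption; the function name `infer_cardinality` and the counting scheme are hypothetical and not part of the current system.

```python
from typing import Iterable


def infer_cardinality(value_counts: Iterable[int]) -> str:
    """Return one of '0..1', '1..1', '0..N', '1..N'.

    value_counts holds, for each observed instance of a concept, how many
    values of the attribute were seen for that instance (an assumed input).
    """
    counts = list(value_counts)
    if not counts:
        raise ValueError("need at least one observed instance")
    # Lower bound is 0 if any instance lacked the attribute, otherwise 1.
    lower = "0" if min(counts) == 0 else "1"
    # Upper bound is N as soon as any instance had more than one value.
    upper = "1" if max(counts) <= 1 else "N"
    return f"{lower}..{upper}"
```

For example, counts of `[0, 3]` (one instance without the attribute, one with three values) would give the form 0..N.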

A fifth rule is to be added to the current set: the pattern "A of_IN B" creates attribute A for concept B (for example, "colour of car" gives concept "car" the attribute "colour").
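The "A of_IN B" rule could be sketched over part-of-speech tagged tokens roughly as follows. This assumes the input is a list of (word, tag) pairs, that A and B are single nouns (tags starting with NN), and that determiners between "of" and B can be skipped; the function name `of_rule` is hypothetical and these restrictions are illustrative choices, not the system's actual behaviour.

```python
from typing import List, Tuple

Tagged = Tuple[str, str]  # (word, part-of-speech tag)


def of_rule(tagged: List[Tagged]) -> List[Tuple[str, str]]:
    """Return (concept, attribute) pairs for every 'A of B' pattern found."""
    pairs = []
    for i in range(1, len(tagged) - 1):
        word, tag = tagged[i]
        if word.lower() != "of" or tag != "IN":
            continue
        a_word, a_tag = tagged[i - 1]
        j = i + 1
        # Skip determiners such as "the" or "a" between "of" and B.
        while j < len(tagged) and tagged[j][1] == "DT":
            j += 1
        if (j < len(tagged)
                and a_tag.startswith("NN")
                and tagged[j][1].startswith("NN")):
            # B is the concept, A becomes its attribute.
            pairs.append((tagged[j][0].lower(), a_word.lower()))
    return pairs
```

Given the tagged sentence "The colour of the car is green", this sketch would yield the pair ("car", "colour").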

We can look at the use of PRPS (possessive pronoun), WPS (possessive wh-pronoun) and IN (preposition or subordinating conjunction) for further attribute learning.

Currently the system builds a taxonomy (hierarchy of words) rather than an ontology. It may be possible to use WordNet to identify children of a concept that would be better represented as values of a single attribute. For example, concept "car" has children "green car" and "blue car". WordNet identifies "green" and "blue" as having the hypernym "colour", so "colour" becomes an attribute for "car" with possible values "green" and "blue".
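The car example could be sketched as below. To keep the example self-contained, a small hand-written dictionary stands in for the WordNet hypernym lookup; in practice `hypernym_of` would wrap a real WordNet query. The function name `promote_children`, the two-word child format, and the requirement that at least two children share a hypernym are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Optional


def promote_children(
    concept: str,
    children: Iterable[str],
    hypernym_of: Callable[[str], Optional[str]],
) -> Dict[str, List[str]]:
    """Group '<modifier> <concept>' children by the modifier's hypernym."""
    grouped = defaultdict(list)
    for child in children:
        words = child.split()
        # Only handle children of the form "<modifier> <concept>".
        if len(words) != 2 or words[1] != concept:
            continue
        hypernym = hypernym_of(words[0])
        if hypernym is not None:
            grouped[hypernym].append(words[0])
    # Promote a hypernym to an attribute only if several children share it.
    return {attr: sorted(vals) for attr, vals in grouped.items() if len(vals) >= 2}


# Toy stand-in for a WordNet lookup (hypothetical data).
toy_hypernyms = {"green": "colour", "blue": "colour"}
attributes = promote_children(
    "car", ["green car", "blue car", "sports car"], toy_hypernyms.get
)
```

Here `attributes` maps "colour" to the values "blue" and "green", while "sports car" stays as an ordinary child because the toy lookup has no hypernym for "sports".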

Web crawling issues: My system does not handle frames or #anchor links intelligently (the former are ignored; the latter cause the same page to be fetched many times).

The system should allow for the specification of a maximum depth in crawling, or for a maximum number of pages to parse.
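A minimal sketch of how the #anchor problem and the two limits might be handled together: fragments are stripped with `urllib.parse.urldefrag` before a URL is checked against the set of pages already seen, and a breadth-first queue carries each page's depth. The names `crawl`, `get_links`, `max_depth` and `max_pages` are hypothetical, and `get_links` is assumed to be supplied by the caller (it could also return frame sources, which this sketch does not address on its own).

```python
from collections import deque
from typing import Callable, Iterable, List
from urllib.parse import urldefrag, urljoin


def crawl(
    start_url: str,
    get_links: Callable[[str], Iterable[str]],
    max_depth: int = 2,
    max_pages: int = 50,
) -> List[str]:
    """Breadth-first crawl returning the pages visited, in order."""
    start = urldefrag(start_url).url  # drop any "#anchor" part
    seen = {start}
    queue = deque([(start, 0)])
    visited = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # do not follow links beyond the depth limit
        for href in get_links(url):
            # Resolve relative links, then strip the fragment so that
            # "page#a" and "page#b" count as the same page.
            target = urldefrag(urljoin(url, href)).url
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return visited
```

Passing the link extractor in as a function keeps the limits and the de-duplication independent of how pages are actually fetched and parsed.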

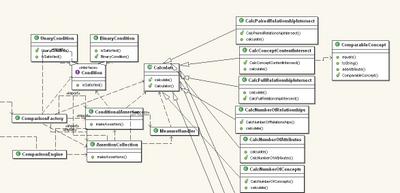

Potential application of system: Document version comparison. By adding meta information to the ontology for a document (such as the number of words, number of concepts, number of attributes, the depth of the deepest hierarchy, and the count at each hierarchy depth), it would be possible to see whether two documents are related: whether one subsumes the other or whether they intersect.
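As a rough illustration of the comparison idea, the sketch below represents an ontology as a mapping from each concept to its parent, computes some of the meta information listed above, and classifies two documents by comparing their concept sets. The representation, the names `summarise` and `relation`, and the use of plain set comparison as the test for subsumption and intersection are all assumptions; attributes and word counts are left out for brevity.

```python
from collections import Counter
from typing import Dict, Optional

Ontology = Dict[str, Optional[str]]  # concept -> parent (None for a root)


def depth(onto: Ontology, concept: str) -> int:
    """Number of parent links between a concept and its root (no cycles assumed)."""
    d = 0
    while onto[concept] is not None:
        concept = onto[concept]
        d += 1
    return d


def summarise(onto: Ontology) -> dict:
    """Meta information: concept count, deepest level, concepts per level."""
    depths = Counter(depth(onto, c) for c in onto)
    return {
        "concepts": len(onto),
        "max_depth": max(depths, default=0),
        "per_depth": dict(depths),
    }


def relation(a: Ontology, b: Ontology) -> str:
    """Classify two ontologies by comparing their sets of concepts."""
    sa, sb = set(a), set(b)
    if sa == sb:
        return "identical"
    if sa > sb:
        return "first subsumes second"
    if sa < sb:
        return "second subsumes first"
    if sa & sb:
        return "intersect"
    return "unrelated"
```

The summary could serve as a cheap first check (for instance, a document with fewer concepts cannot subsume one with more) before the concept sets themselves are compared.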